Introducing GPUImage 2, redesigned in Swift

Back in 2010, I gave a talk about the use of OpenGL shaders to accelerate image and video processing on mobile devices. The response from that talk was strong enough that two years later I started work on the open source framework GPUImage with the goal of making this kind of processing more accessible to developers. In an attempt to broaden the reach of this framework, today I'm introducing the completely-rewritten-in-Swift GPUImage 2 with support for Mac, iOS, and now Linux. This isn't just a port, it's a complete rewrite of the framework.

I'm still shocked at the popularity of GPUImage, which right now is the fourth-most-starred Objective-C repository on GitHub. I built GPUImage as a means of making it easier to use OpenGL ES shaders to do GPU-accelerated processing of live camera video. It started as a project with the ability to process video from a camera on an iOS device and display it to the screen, and it came with only a single template for a filter. In the years since, it's grown to support movies, images, OpenGL textures, raw binary data, over 100 operations, and the Mac.

Before I talk about the new version, I'd like to thank everyone who helped make the project what it is today, whether that was through pull requests adding new features or fixes, feedback that pointed out what was needed or broken, or just the many kind words I've heard in the last few years. Again, the response to this little hobby project of mine has never failed to surprise. Many of the contributed fixes and improvements have been rolled into this new framework.

Why rewrite in Swift

My end goal with GPUImage has been to use it for GPU-accelerated machine vision. There are many applications for machine vision on iOS devices and Macs just waiting for the right tools to come along, but there are even more in areas that Apple's hardware doesn't currently reach. Economies of scale around smartphone hardware have made it possible to build tiny yet capable single-board computers for under $40. I believe that embedded Linux board computers like the Raspberry Pi will have a significant impact in the years to come, particularly as the GPUs within them become more powerful.

That's why the day that Swift was released as open source, with support for Linux as a target, I started planning out how I could bring GPUImage over to Swift. I've written before about why our company decided to rewrite our robotics software in Swift and what we learned from the experience. That process has continued to pay dividends for us months later, leading to an accelerated pace of development while greatly reducing the number of bugs in our software. I had reason to believe that GPUImage would benefit from this, too.

Therefore, I started fresh and built an entirely new project in Swift. The result is available on GitHub. I should caution upfront that this version of the framework is not yet ready for production, as I still need to implement, fix, or test many areas. Almost all operations are working, but some inputs / outputs are nonfunctional and several customization options are missing. Consider this a technology preview.

It may seem a little odd for me to call this GPUImage 2. After all, I strongly resisted any attempts to add a version to the original GPUImage, and only tagged it with a 0.x.x development version to help those who wanted this on CocoaPods. However, this Swift rewrite dramatically changes the interface, drops compatibility with older OS versions, and is not intended to be used with Objective-C applications. The older version of the framework will remain up to maintain that support, and I needed a way to distinguish between questions and issues involving the rewritten framework and those about the Objective-C one.

Here are some statistics on the new version of the framework vs. the old:

| GPUImage Version | Files | Lines of Code |
|---|---|---|
| Objective-C (without shaders) | 359 | 20107 |
| Swift (without shaders) | 157 | 4549 |
| Shaders | 233 | 6670 |

The rewritten Swift version of the framework, despite doing everything the Objective-C version does*, only uses 4549 lines of non-shader code vs. the 20107 lines of code before (shaders were copied straight across between the two). That's only about 23% of the size. That reduction in size is due to a radical internal reorganization which makes it far easier to build and define custom filters and other processing operations. For example, take a look at the difference between the old GPUImageSoftEleganceFilter (don't forget the interface) and the new SoftElegance operation. They do the same thing, yet one is 62 lines long and the other 20. The setup for the new one is much easier to read as a result.

* (OK, with just a few nonfunctional parts. See the bottom of this page.)

The Swift framework has also been made easier to work with. Clear and simple platform-independent data types (Position, Size, Color, etc.) are used to interact with the framework, and you get safe arrays of values from callbacks, rather than raw pointers. Optionals are used to enable and disable overrides, and enums make values like image orientations easy to follow. Chaining of filters is now easier to read using the new --> operator:

    camera --> filter1 --> filter2 --> view

Compare that to the previous use of addTarget(), and you can see how this new syntax is easier to follow:

    camera.addTarget(filter1)
    filter1.addTarget(filter2)
    filter2.addTarget(view)
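To make the chaining idea concrete, here is a minimal, self-contained sketch of how an operator like this could be declared. The `Source`, `Consumer`, and `Node` types are hypothetical stand-ins invented for this example, and the choice of `AdditionPrecedence` is an assumption; this is not the framework's actual source.

```swift
// Hypothetical stand-ins for the framework's source and consumer types;
// the real ones pass framebuffers around, not names.
protocol Consumer: AnyObject {
    var name: String { get }
}

protocol Source: AnyObject {
    var targets: [Consumer] { get set }
}

// A node that both consumes and produces images, like a filter.
final class Node: Source, Consumer {
    let name: String
    var targets: [Consumer] = []
    init(_ name: String) { self.name = name }
}

// Left-associative, so a --> b --> c parses as (a --> b) --> c.
infix operator --> : AdditionPrecedence

// Attaches the destination to the source and returns the destination,
// which lets the next --> in the chain continue from it.
@discardableResult
func --> <T: Consumer>(source: Source, destination: T) -> T {
    source.targets.append(destination)
    return destination
}

let camera = Node("camera")
let filter1 = Node("filter1")
let filter2 = Node("filter2")
let view = Node("view")

camera --> filter1 --> filter2 --> view
```

Returning the destination is what makes the chain read left to right: each `-->` hands its right-hand side to the next one as the new source.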

I'm very happy with the result. In fact, I most likely will be scaling back my work on the Objective-C version, because the Swift one better serves my needs. The old version will remain for legacy support, but my new development efforts will be focused on GPUImage 2.

GPUImage on Linux

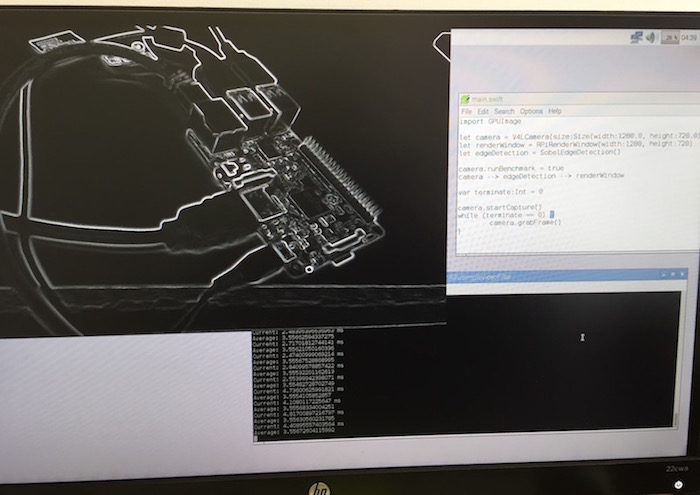

So it's cleaner and easier to work with, but what about that new Linux support? GPUImage now supports Linux as a target platform, using both OpenGL and OpenGL ES. I've added special support for the Raspberry Pi and its direct rendering to the screen via its Broadcom GPU. Here's the Raspberry Pi 3 rendering live Sobel edge detection from an incoming camera at 720p at 30 FPS:

This is the entirety of the code for the application that does the above:

import GPUImage

let camera = V4LCamera(size:Size(width:1280.0, height:720.0))

let renderWindow = RPiRenderWindow(width:1280, height:720)

let edgeDetection = SobelEdgeDetection()

camera --> edgeDetection --> renderWindow

camera.startCapture()

while (true) {

camera.grabFrame()

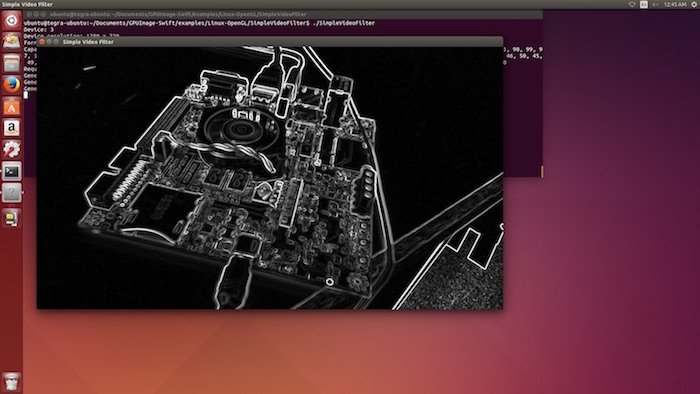

}Likewise, here's Sobel edge detection of live video in desktop OpenGL on an Nvidia Jetson TK1 development board (which has a surprisingly powerful GPU in a ~$190 board computer):

Video input is provided via the Video4Linux API, so any camera compatible with that should work with the framework. As mentioned, OpenGL ES (as used in the Raspberry Pi) and desktop OpenGL APIs are supported via GLUT. Right now, I don't have still image or movie input and output working, as I need to find the right libraries to use for those.

Building for Linux is a bit of a pain at the moment, since the Swift Package Manager isn't completely operational on ARM Linux devices, and even where it is I don't quite have the framework compatible with it yet. Due to the number of targets supported by the framework, and all the platform-specific code that has to be filtered out, I haven't gotten the right package structure in place yet. That's something I'm actively working on, though, because I want this framework to be as easy to incorporate as typing "swift build".

In the meantime, I've provided a couple of crude build scripts in the /framework and /examples directories to build the framework and the Linux sample applications by hand. Detailed installation and usage instructions are provided in the project's Readme.md following the steps I used to set up my Raspberry Pis and other Linux setups.

Even with the early hassles, it's stunning to see the same high-performance image processing code written for Mac or iOS running unmodified on a Linux desktop or an embedded computing board. I look forward to the day when all it takes is a few "apt-get"s to pull the right packages, a few lines of code, and a "swift build" to get up and running with GPU-accelerated machine vision on a Raspberry Pi. This may even finally answer the many, many people who have asked over the years for an Android port of the framework.

What's new

I couldn't pass up the opportunity to add some new features to the framework. Some of these have been requested for years.

Filters can now be applied to arbitrary shapes on an image. This works for almost every filter in the framework. You supply an alpha mask to the mask property of the filter. The areas of this mask that are opaque will have the filter applied to them, while the original image will be used for areas that are transparent. This mask can come from a still image, a shape generator operation (new with this version), or even the output from another filter.

Filters that were created solely to do this, such as the GPUImageGaussianSelectiveBlurFilter, are no longer needed and have been removed.
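As an illustration of the masking behavior described above, the following sketch blends a filtered value with the original on the CPU. The function name and the single-channel arrays are hypothetical, and treating partially transparent mask values as a linear mix is an assumption; the framework does this work on the GPU.

```swift
// Hypothetical single-channel blend: alpha 1.0 keeps the filtered value,
// alpha 0.0 keeps the original, and values in between mix linearly
// (the linear mix for partial alpha is an assumption).
func applyMask(original: [Float], filtered: [Float], maskAlpha: [Float]) -> [Float] {
    precondition(original.count == filtered.count && original.count == maskAlpha.count,
                 "All three buffers must have the same length")
    return original.indices.map { i in
        original[i] * (1.0 - maskAlpha[i]) + filtered[i] * maskAlpha[i]
    }
}

let originalPixels: [Float] = [0.2, 0.2, 0.2]
let filteredPixels: [Float] = [0.8, 0.8, 0.8]
let maskPixels: [Float] = [0.0, 0.5, 1.0]

let maskedPixels = applyMask(original: originalPixels,
                             filtered: filteredPixels,
                             maskAlpha: maskPixels)
```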

Crop sizes are now in pixels. While I like to use normalized coordinates throughout the framework, it was a real pain to crop images to a specific size that came from a video source. Typically you'd only know the size of the frames (and thus how to normalize your target size) after the video started playing. As a result, crops can now be defined in pixels and the normalized size is calculated internally.
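The internal calculation could look something like the sketch below, which turns a pixel crop size into a normalized region once the frame size is known. `PixelSize`, `NormalizedRect`, and `normalizedCrop` are hypothetical names for this example, and centering the crop is an assumption rather than a statement about the framework's default.

```swift
// Hypothetical types for illustration; not the framework's own definitions.
struct PixelSize { let width: Float; let height: Float }
struct NormalizedRect { let x: Float; let y: Float; let width: Float; let height: Float }

// Converts a crop size in pixels into a centered, normalized crop region,
// clamping the crop so that it never exceeds the frame.
func normalizedCrop(cropSize: PixelSize, frameSize: PixelSize) -> NormalizedRect {
    precondition(frameSize.width > 0 && frameSize.height > 0, "Frame must not be empty")
    let width = min(cropSize.width, frameSize.width) / frameSize.width
    let height = min(cropSize.height, frameSize.height) / frameSize.height
    return NormalizedRect(x: (1.0 - width) / 2.0,
                          y: (1.0 - height) / 2.0,
                          width: width,
                          height: height)
}

// A 640x360 crop taken from a 720p frame.
let cropRegion = normalizedCrop(cropSize: PixelSize(width: 640, height: 360),
                                frameSize: PixelSize(width: 1280, height: 720))
```

Because the frame size is only an argument to the conversion, the caller can state the crop in pixels before any video frames have arrived.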

Gaussian and box blurs now automatically downsample and upsample for performance. When I built the Gaussian blur mechanism in GPUImage, I was very surprised at the benchmarked performance of Core Image for large blur radii. I believe that this performance was achieved by downsampling the image, blurring at a smaller radius, and then upsampling the blurred image. The results from this very closely simulate large blur radii, and this is much, much faster than direct application of a large blur. Therefore, this is now enabled by default in many of my blurs, which should lead to huge performance boosts in many situations. For example, an iPhone 4S now renders a 720p frame with a blur radius of 40 in under 7 ms, where before it would take well over 150 ms.
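One way to picture the downsample-blur-upsample approach is the planning step sketched below, which picks a downsampling factor and the smaller radius to blur at. The power-of-two factor and the `maxDirectRadius` threshold are assumed tuning choices for illustration, not values taken from the framework.

```swift
// Picks a power-of-two downsampling factor so that the blur actually run
// never exceeds maxDirectRadius. Both the power-of-two stepping and the
// default threshold are assumptions made for this sketch.
func downsampledBlur(radius: Float, maxDirectRadius: Float = 8.0) -> (factor: Float, effectiveRadius: Float) {
    precondition(maxDirectRadius > 0, "Threshold must be positive")
    var factor: Float = 1.0
    while radius / factor > maxDirectRadius {
        factor *= 2.0
    }
    return (factor, radius / factor)
}

// A radius-40 blur becomes a radius-5 blur on an image 1/8 the size
// in each dimension.
let blurPlan = downsampledBlur(radius: 40.0)
```

With a factor of 8, the blur passes touch 64 times fewer pixels and sample a much smaller neighborhood per pixel, which is where the speedup for large radii would come from.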

What's changed

Because this version requires Swift, and is packaged as a module, the OS version compatibility has been reduced. Here are the version requirements:

- iOS: 8.0 or higher (Swift is supported on 7.0, but not Mac-style frameworks)

- OS X: 10.9 or higher

- Linux: Wherever Swift code can be compiled. Currently, that's Ubuntu 14.04 or higher, along with the many other places it has been ported to. I've gotten this running on the latest Raspbian, for example.

One of the first things you may notice is that naming has been changed across the framework. Without the need for elaborate naming conventions to avoid namespace collisions, I've simplified the names of types throughout the framework. A GPUImageCamera is now just a camera. GPUImageViews are RenderViews. I've taken to calling anything that takes in or generates an image an operation, rather than a filter, since the framework now does so much more than just filter images. As a result, the -Filter suffix has been removed from many types.

The framework uses platform-independent types where possible: Size, Color, and Float instead of CGSize, UIColor / NSColor, and CGFloat / GLfloat. This makes it easier to maintain code between Mac, iOS, and Linux. In callback functions, you now get defined arrays of these types instead of raw, unmanaged pointers to bytes. That will make it both easier and safer to work with these callbacks.

Optionals now make working with overrides for size, etc. much clearer.

Protocols are used to define types that take in images, produce images, and do both. This is part of a larger shift in architecture from code reuse via inheritance to code reuse via composition. While operations, inputs, and outputs are still classes (I wanted to use reference types to wrap hardware resources), levels of inheritance have been removed in favor of commonly used free functions or the much more capable ShaderProgram and Framebuffer internal types.

For example, you no longer need to create custom subclasses for filters that take in different numbers of inputs (GPUImageTwoInputFilter and friends). The primary BasicOperation class lets you specify how many inputs you want (1-8), and it does the rest for you. This both cuts down on a ton of code and avoids awkward subclasses that didn't make much sense. You just need to provide your shaders and you're good.

Speaking of shaders, those are now handled in a very different way. In the old Objective-C code, I inlined the shader code within the filter classes themselves via a compiler macro. Such compiler macros are no longer supported in Swift, and Swift doesn't have a means for easy multiline string constants of the style that I'd need for copying-and-pasting shaders, so I've devised a different way to handle this.

Now, all shaders for all operations reside in a special Operations/Shaders subdirectory within the framework. Fragment shaders have a .fsh extension and vertex shaders have a .vsh one. For shaders that are specific to OpenGL, the suffix _GL is used, and for OpenGL ES, _GLES. In that subdirectory is a Swift script that when run parses all of these shader files and generates two Swift files that inline as named string constants all of the shader code specific to the OpenGL API and the OpenGL ES API.

Whenever you modify a shader or add a new one, you need to run

./ShaderConverter.sh *

in that directory for your shader to be re-parsed and included in the Swift code. At some point, I'll do something to automate this scripting as part of the build process in a way that doesn't impact build times too much. For now, you have to run this by hand when you change shaders.
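The core of such a conversion step can be sketched in a few lines. The code below is not the project's converter script; the function names and the `...FragmentShader` / `...VertexShader` constant naming are assumptions made for this example. It only shows how a shader file's name and contents could become a single-line Swift string constant.

```swift
// Escapes shader source into a single-line Swift string literal.
func swiftLiteral(for shaderSource: String) -> String {
    var escaped = ""
    for scalar in shaderSource.unicodeScalars {
        switch scalar {
        case "\\": escaped += "\\\\"
        case "\"": escaped += "\\\""
        case "\n": escaped += "\\n"
        case "\r": continue  // drop carriage returns from CRLF files
        default: escaped.unicodeScalars.append(scalar)
        }
    }
    return "\"" + escaped + "\""
}

// Derives a constant name from a file name such as "Brightness_GLES.fsh".
// The naming scheme here is hypothetical.
func constantName(forFile fileName: String) -> String? {
    let parts = fileName.split(separator: ".")
    guard parts.count == 2 else { return nil }
    var base = String(parts[0])
    if base.hasSuffix("_GLES") {
        base.removeLast(5)
    } else if base.hasSuffix("_GL") {
        base.removeLast(3)
    }
    switch parts[1] {
    case "fsh": return base + "FragmentShader"
    case "vsh": return base + "VertexShader"
    default: return nil
    }
}

// Produces one line of generated Swift for a shader file, or nil if the
// file does not look like a shader.
func swiftDeclaration(fileName: String, source: String) -> String? {
    guard let name = constantName(forFile: fileName) else { return nil }
    return "public let \(name) = \(swiftLiteral(for: source))"
}

let generatedLine = swiftDeclaration(fileName: "Brightness_GLES.fsh",
                                     source: "void main()\n{\n}\n")
```

A full converter would also have to read the files from disk and route `_GL` and `_GLES` variants into separate output files, which this sketch leaves out.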

While slightly less convenient than before, this now means that shaders get full GLSL syntax highlighting in Xcode and this makes it a lot easier to define single-purpose steps in image processing routines without creating a bunch of unnecessary custom subclasses.

The underlying shader processing code is also much more intelligent, so you no longer need to explicitly attach attributes and uniforms in code; this is all handled for you. Swift's type system and method overloading now let you assign a value of any supported type to a uniform by its text name, and the framework will convert it to the correct OpenGL types and functions for attachment to the uniform.
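The overloading idea can be modeled without any OpenGL at all. In the sketch below, `UniformValue`, `UniformSettings`, and this `Color` struct are hypothetical types written for the example; a real implementation would forward each case to the matching OpenGL uniform call instead of storing it in a dictionary.

```swift
// Hypothetical model of type-driven uniform handling.
struct Color {
    let red: Float, green: Float, blue: Float, alpha: Float
}

// The GLSL-side representation each Swift value is converted to.
enum UniformValue: Equatable {
    case float(Float)
    case int(Int32)
    case vec4(Float, Float, Float, Float)
}

struct UniformSettings {
    private(set) var values: [String: UniformValue] = [:]

    // One overload per supported Swift type; the compiler picks the right
    // one from the argument's static type.
    mutating func set(_ name: String, to value: Float) {
        values[name] = .float(value)
    }

    mutating func set(_ name: String, to value: Int32) {
        values[name] = .int(value)
    }

    mutating func set(_ name: String, to value: Color) {
        values[name] = .vec4(value.red, value.green, value.blue, value.alpha)
    }
}

let brightness: Float = 0.5
let levels: Int32 = 4
let tint = Color(red: 1.0, green: 0.0, blue: 0.0, alpha: 1.0)

var uniforms = UniformSettings()
uniforms.set("brightness", to: brightness)
uniforms.set("levels", to: levels)
uniforms.set("tint", to: tint)
```

The caller never names an OpenGL type or function; the argument's Swift type alone selects the conversion.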

Groups are now much easier to define. The OperationGroup class now defines a simple function that's used to set up its internal structure:

    self.configureGroup{input, output in
        input --> self.unsharpMask
        input --> self.gaussianBlur --> self.unsharpMask --> output
    }

which makes it easy to build even complex multistep operations. No more confusion about inputs and outputs from the group, or ordering of targets. One of the things I've been surprised with in GPUImage over the years is how chaining together simple building blocks brings even very complex operations within your reach, and this should help with that.

Finally, I've changed the way that images are extracted from the framework. Before, you could pull images from any operation at any point. This caused problems with the framebuffer caching mechanism I introduced to dramatically reduce memory usage within the framework. I could never work out all the race conditions involving these transient framebuffers, so I've switched to using defined output classes for extraction of images. If you wish to pull images from the framework, you now need to target the filter you're capturing from to a PictureOutput or RawDataOutput instance. Those classes have asynchronous callbacks to grab and handle image capture in a reliable manner.
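The callback pattern behind a dedicated output class can be sketched as follows. `ByteOutput` is a hypothetical stand-in written for this example, not PictureOutput or RawDataOutput, and the frame is delivered synchronously by hand here, whereas the real classes are described as asynchronous.

```swift
// Hypothetical stand-in for an output class; it only demonstrates
// receiving pixel data as a safe array through a callback.
final class ByteOutput {
    // Called once per captured frame with a copy of the pixel bytes.
    var dataAvailableCallback: (([UInt8]) -> Void)?

    // In a real pipeline the upstream operation would trigger this after
    // rendering; in this sketch it is called by hand.
    func newFrameAvailable(_ bytes: [UInt8]) {
        dataAvailableCallback?(bytes)
    }
}

let byteOutput = ByteOutput()
var capturedFrames: [[UInt8]] = []

byteOutput.dataAvailableCallback = { bytes in
    capturedFrames.append(bytes)
}

// Simulate one 1x1 RGBA frame arriving from the pipeline.
byteOutput.newFrameAvailable([255, 0, 0, 255])
```

Since frames only leave the pipeline through an object like this, the framework decides when the underlying framebuffer is read, rather than the caller reaching in at an arbitrary moment.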

What's missing

As mentioned above, I would not recommend using this in production until I stabilize the codebase and add the last few features back in. Among the things that are missing from this release:

- Still photo camera capture

- Movie playback and recording

- Audio recording

- Stable GCD integration, so most things are done on the main thread, not multithreaded

- Texture cache acceleration for fast image and raw data extraction off of the GPU

- Many of the sample applications are missing (raw data handling, object tracking, photo capture)

- UI element capture

- Image and view resizing or reorientation after setup of the processing chain

- This thing probably leaks memory like a sieve

Equivalents of the following GPUImage filters are also missing right now:

- GPUImagePerlinNoiseFilter.h

- GPUImageHoughTransformLineDetector.h

- GPUImageToneCurveFilter.h

- GPUImageHistogramFilter.h

- GPUImageHistogramEqualizationFilter.h

- GPUImageMosaicFilter.h

- GPUImagePoissonBlendFilter.h

- GPUImageJFAVoronoiFilter.h

- GPUImageVoronoiConsumerFilter.h

- GPUImageHSBFilter.h

On Linux, the input and output options are fairly restricted until I settle on a good replacement for Cocoa's image and movie handling capabilities. I may add external modules to add support for things like FFmpeg.

Final words

My goal is that eventually people will find this framework to be more capable, more reliable, and more extensible than the Objective-C version, while also being easier to work with.