GPU-accelerated video processing on Mac and iOS

I've been invited to give a talk at the SecondConf developer conference in Chicago, and I'm writing this to accompany it. I'll be talking about using the GPU to accelerate processing of video on Mac and iOS. The slides for this talk are available here. The source code samples used in this talk will be linked throughout this article. Additionally, I'll refer to the course I teach on advanced iPhone development, which can be found on iTunes U here.

UPDATE (7/12/2011): My talk from SecondConf on this topic can now be downloaded from the conference videos page here.

UPDATE (2/13/2012): Based on this example, I've now created the open source GPUImage framework which encapsulates these GPU-accelerated image processing tasks. Read more about the framework in my announcement, and grab its code on GitHub. This framework makes it a lot easier to incorporate these custom filter effects in an iOS application.

Processing video on the GPU

As of iOS 4.0, we now have access to the raw video data coming in from the built-in camera(s). The Mac has had this capability for a long while, but the mobility of iOS devices opens up significant opportunities to process and understand the world around us.

However, dealing with images coming at an application at up to 60 frames per second can strain even the most powerful processor. This is particularly the case with resource-constrained mobile devices. Fortunately, many of these devices contain processors ideally suited to this task in their GPUs.

GPUs are built to perform parallel processing tasks on large sets of data. For a while, desktop computers have had GPUs that can be programmed using simple C-like shaders to produce fascinating effects. These same shaders also let you run arbitrary calculations against data input to the GPU. With more and more OpenGL ES 2.0 compatible GPUs being bundled into mobile devices, this kind of GPU-based computation is now possible on handhelds.

On the Mac, we have three technologies for performing work on the GPU: OpenGL, Core Image, and OpenCL. Core Image, as its name indicates, is geared toward processing images to create unique effects in a hardware-accelerated manner. OpenCL is a new technology in Snow Leopard that takes the use of GPUs as computational devices to its logical extreme by defining a way of easily writing C-like programs that can process loads of various types of data on the GPU, CPU, or both.

On iOS, we just have OpenGL ES 2.0, which makes these kinds of calculations more difficult than they are with OpenCL or Core Image, but they are still possible through programmable shaders.

Why would we want to go through the effort of building custom code to upload, process, and extract data from the GPU? I ran some benchmarks on my iPhone 4 where I performed a simple calculation (identifying a particular color, within a certain threshold) a varying number of times within a loop against every pixel in a 480 x 320 image. This was done using a simple C implementation that ran on the CPU, and a programmable shader that ran on the GPU. The results are as follows:

| Calculation | GPU FPS | CPU FPS | Speedup |
|---|---|---|---|
| Thresholding x 100 | 1.45 | 0.05 | 28.7X |
| Thresholding x 2 | 33.63 | 2.36 | 14.3X |
| Thresholding x 1 | 60.00 | 4.21 | 14.3X |

As you can see, the GPU handily beats the CPU in every case on these benchmarks. These benchmarks were run multiple times, with the average of those results presented here (there was reasonably little noise in the data). On the iPhone 4, you can expect roughly a 14X to 29X speedup by running a simple calculation like this across a lot of data. This makes it practical to implement object recognition and other video processing tasks on mobile devices.

Using Quartz Composer for rapid prototyping

It can be difficult to design applications that use the GPU, because there generally is a lot of code required just to set up the interaction with Core Image, OpenCL, or OpenGL, not to mention any video sources you have coming in to the application. While much of this code may be boilerplate, it still is a hassle to build up a full application to test out the practicality of an idea or even just to experiment with what is possible on the GPU.

That's where Quartz Composer can make your life a lot easier. Quartz Composer is a development tool that doesn't get much attention, despite the power that resides within the application. The one group it seems to have traction with is VJs, due to the very cool effects you can generate quickly based on audio and video sources.

However, I think that Quartz Composer is an ideal tool for rapid prototyping of Core Image filters, GLSL programmable shaders, and OpenCL kernels. To test one of these GPU-based elements, all you need to do is drag in the appropriate inputs and outputs, drop in your kernel code in an appropriate patch, and the results will be displayed onscreen. You can then edit the code for your filter, kernel, or shader and see what the results look like in realtime. There's no compile, run, debug cycle in any of this.

This can save you a tremendous amount of time by letting you focus on the core technical element you're developing and not any of the supporting code that surrounds it. Even though it is a Mac-based tool, you can just as easily use this to speed up development of iPhone- and iPad-based OpenGL ES applications by testing out your custom shaders here in 2-D or 3-D.

For more on Quartz Composer, I recommend reading Apple's "Working with Quartz Composer" tutorial and their Quartz Composer User Guide, as well as visiting the great community resources at Kineme.net and QuartzComposer.com.

Video denoising

As a case study for how useful Quartz Composer can be in development, I'd like to describe some work we've been doing lately at SonoPlot. We build robotic systems there that act like pen plotters on the microscale and are useful for printing microelectronics, novel materials, and biological molecules like DNA and proteins. Our systems have integrated CCD cameras that peer through high-magnification lenses to track the printing process.

These cameras can sometimes need to be run at very high gains, which introduces CCD speckle noise. This noise reduces the clarity of the video being received, and can make recorded MPEG4 videos look terrible. Therefore, we wanted to try performing some kind of filtering on the input video to reduce this noise. We just didn't know which approach would work best, or even if this was worth doing.

We used Quartz Composer to rig up an input source from our industrial-grade IIDC-compliant FireWire cameras through multiple Core Image filters, then output the results to the screen to test filter approaches. You can download that composition from here to try out for yourself, if you have a compatible camera.

The denoise filtering Quartz Composition will require you to have two custom plugins installed on your system, both provided by Kineme.net: VideoTools and Structure Maker. VideoTools lets you work with FireWire IIDC cameras using the libdc1394 library, as well as set their parameters like gain, exposure, etc. If you wish to just work with the built-in iSight cameras, you can delete the Kineme Video Input patch in these compositions and drop in the standard Video Input in its place.

From this, we were able to determine that a Core Image low-pass filter with a specific filter strength was the best solution for quickly removing much of this speckle, and now can proceed to implementing that within our application. By testing this out first in Quartz Composer, not only were we able to save ourselves a lot of time, but we now know that this approach will work and we have confidence in what we're building.
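To give a feel for what a low-pass filter with an adjustable strength does to speckle noise, here is a minimal CPU sketch in C. It assumes a temporal filter that blends each incoming frame into a running average; the actual Core Image kernel in the composition may work differently. The function name `low_pass_frame` and the flat `float` buffer layout are illustrative choices, not taken from the composition.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: blends one incoming frame into a running average.
 * `accumulated` holds the filtered output from previous frames and is
 * updated in place. `strength` is the fraction of the new frame mixed in:
 * 1.0 disables filtering, smaller values smooth more but add more lag. */
void low_pass_frame(float *accumulated, const float *incoming,
                    size_t count, float strength)
{
    assert(accumulated != NULL && incoming != NULL);
    assert(strength >= 0.0f && strength <= 1.0f);

    for (size_t i = 0; i < count; i++) {
        /* Move each value part of the way toward the new sample. */
        accumulated[i] += strength * (incoming[i] - accumulated[i]);
    }
}
```

Because speckle changes randomly from frame to frame while the scene mostly does not, averaging over time like this suppresses the noise at the cost of some motion blur, which is why the strength has to be tuned by eye.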

Color-based object tracking

A while ago, I had watched the presentation given by Ralph Brunner at WWDC 2007 ("Create Stunning Effects with Core Image") and was impressed by the demonstration he provided of doing object tracking in a video that was keyed off of the object's color. That demo turned into the CIColorTracking sample application, as well as a chapter in the GPU Gems 3 book which is available for free online.

When I saw that iOS 4.0 gave us the ability to handle the direct video frames being returned from the iPhone's (and now iPod touch's) camera, I wondered if it would be possible to do this same sort of object tracking on the iPhone. It turns out that the iPhone 4 can do this at 60 frames per second by using OpenGL ES 2.0, but I didn't know that at the time. Therefore, I wanted to test this idea out.

The first thing I did was create a Quartz Composer composition that used the Core Image filters from their example. I wanted to understand how the filters interacted and play with them to see how they worked. That composition can be downloaded from here.

The way this example works is that you first supply a color that you'd like to track, and a threshold for the sensitivity of detecting that color. You want to adjust that sensitivity so that you're picking up the various shades of that color in your object, but not unrelated colors in the environment.

The first Core Image filter takes your source image and goes through each pixel in that image, determining whether that pixel is close enough to the target color, within the threshold specified. Both the pixel and target colors are normalized (red, green, blue channels added, divided by three, then the whole color divided by that amount) to attempt to remove the effect of varying lighting on colors. If a pixel is within the threshold of the target color, it is replaced with an RGBA value of (1.0, 1.0, 1.0, 1.0). Otherwise, it is replaced with (0.0, 0.0, 0.0, 0.0).
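The thresholding step can be sketched on the CPU in plain C, roughly as follows. This is only an illustration of the logic described above, not the Core Image kernel itself. The `Pixel` struct and function names are made up for the example, and it assumes that "close enough" means the Euclidean distance between the normalized colors, which is one reasonable reading.

```c
#include <assert.h>
#include <stddef.h>

/* Illustrative RGBA pixel with channels in the 0.0 - 1.0 range. */
typedef struct { float r, g, b, a; } Pixel;

/* Divide a color by the mean of its channels so that the same hue under
 * brighter or dimmer lighting maps to roughly the same value. */
Pixel normalize_color(Pixel c)
{
    float mean = (c.r + c.g + c.b) / 3.0f;
    Pixel n = {0.0f, 0.0f, 0.0f, c.a};
    if (mean > 0.0f) {            /* leave pure black at zero */
        n.r = c.r / mean;
        n.g = c.g / mean;
        n.b = c.b / mean;
    }
    return n;
}

/* Write opaque white where a pixel matches the target color and
 * transparent black elsewhere. Returns the number of matching pixels. */
size_t threshold_image(const Pixel *src, Pixel *dst, size_t count,
                       Pixel target, float threshold)
{
    assert(src != NULL && dst != NULL);

    const Pixel white = {1.0f, 1.0f, 1.0f, 1.0f};
    const Pixel clear = {0.0f, 0.0f, 0.0f, 0.0f};
    Pixel t = normalize_color(target);
    size_t matches = 0;

    for (size_t i = 0; i < count; i++) {
        Pixel p = normalize_color(src[i]);
        float dr = p.r - t.r, dg = p.g - t.g, db = p.b - t.b;
        /* Compare squared distances to avoid a square root per pixel. */
        if (dr * dr + dg * dg + db * db < threshold * threshold) {
            dst[i] = white;
            matches++;
        } else {
            dst[i] = clear;
        }
    }
    return matches;
}
```

Note how a dark red such as (0.4, 0.1, 0.1) and a bright red such as (0.8, 0.2, 0.2) normalize to the same value, which is the point of the normalization.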

The second filter takes the thresholded pixels and multiplies their values with the normalized X,Y coordinates (0.0 - 1.0) to store the coordinate of each detected pixel in that pixel's color.
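As a rough CPU illustration of this second pass, the sketch below multiplies each thresholded pixel by its normalized position. The `Pixel` struct and the function name are hypothetical, and sampling at pixel centers (the `+ 0.5f`) is an assumption on my part; the original filter may define its coordinates slightly differently.

```c
#include <assert.h>
#include <stddef.h>

/* Illustrative RGBA pixel with channels in the 0.0 - 1.0 range. */
typedef struct { float r, g, b, a; } Pixel;

/* Store each detected pixel's normalized position in its color:
 * red carries X, green carries Y. Pixels that failed the threshold test
 * are all zeros, so they stay at zero after the multiplication. */
void encode_positions(const Pixel *mask, Pixel *dst,
                      size_t width, size_t height)
{
    assert(mask != NULL && dst != NULL && width > 0 && height > 0);

    for (size_t y = 0; y < height; y++) {
        for (size_t x = 0; x < width; x++) {
            size_t i = y * width + x;
            /* Normalized coordinates of the pixel center (assumption). */
            float nx = ((float)x + 0.5f) / (float)width;
            float ny = ((float)y + 0.5f) / (float)height;

            dst[i].r = mask[i].r * nx;
            dst[i].g = mask[i].g * ny;
            dst[i].b = mask[i].b;   /* blue and alpha still mark a match */
            dst[i].a = mask[i].a;
        }
    }
}
```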

Finally, the colors are averaged across all pixels, then adjusted by the percentage of pixels that are transparent (ones that failed the threshold test). A single pixel is returned from this, with a color that has the centroid of the thresholded area stored in its red and green colors, and the relative size of the area in its blue and alpha color components.
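The averaging step is easy to get subtly wrong, so here is a small CPU sketch of the arithmetic in C. The struct and function names are invented for the example. The idea is that averaging over every pixel dilutes the stored coordinates by the pixels that failed the test, so dividing by the average alpha (the fraction of pixels that passed) recovers the centroid.

```c
#include <assert.h>
#include <stddef.h>

/* Illustrative RGBA pixel with channels in the 0.0 - 1.0 range. */
typedef struct { float r, g, b, a; } Pixel;

/* Hypothetical result type: centroid in normalized coordinates, plus the
 * fraction of the image that matched. `found` is 0 if nothing matched. */
typedef struct { float x, y, coverage; int found; } TrackResult;

TrackResult locate_centroid(const Pixel *encoded, size_t count)
{
    assert(encoded != NULL && count > 0);

    double sum_r = 0.0, sum_g = 0.0, sum_a = 0.0;
    for (size_t i = 0; i < count; i++) {
        sum_r += encoded[i].r;   /* X positions of matching pixels */
        sum_g += encoded[i].g;   /* Y positions of matching pixels */
        sum_a += encoded[i].a;   /* 1.0 per matching pixel */
    }

    /* Averages over ALL pixels, including the ones that did not match. */
    double mean_r = sum_r / (double)count;
    double mean_g = sum_g / (double)count;
    double mean_a = sum_a / (double)count;

    TrackResult result = {0.0f, 0.0f, (float)mean_a, 0};
    if (mean_a > 0.0) {
        /* Undo the dilution caused by the non-matching pixels. */
        result.x = (float)(mean_r / mean_a);
        result.y = (float)(mean_g / mean_a);
        result.found = 1;
    }
    return result;
}
```

For instance, if half of the pixels match and their X positions average to 0.5, the mean red value over the whole image is 0.25, and dividing by the coverage of 0.5 gives back 0.5.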

The iPhone lacks Core Image, but it does have the technology originally used as the underpinning for Core Image, OpenGL. Specifically, all newer iOS devices have support for OpenGL ES 2.0 and programmable shaders. As I described earlier, shaders are written using the OpenGL Shading Language (GLSL) and they instruct the GPU to process 2-D and 3-D data to render onscreen or in a framebuffer.

Therefore, I needed to port the Core Image kernel code from Apple's example to GLSL shaders. Again, I decided to use Quartz Composer for the design portion of this. The resulting composition can be downloaded from here.

Porting the filters over turned out to be pretty straightforward. Core Image and GLSL have very similar syntaxes, with only a few helper functions and language features that differ.

Object tracking on iPhone

The final step was taking this GLSL-based implementation and building an application around it. The source code for that application can be downloaded from here. Note that this application needs to be built for the device, because the Simulator in the iOS 4.1 SDK seems to be lacking some of the AVFoundation video capture symbols.

The application has a control at the bottom that lets you switch between four different views: the raw camera feed, a view that overlays the thresholded pixels on the camera feed, a view of the thresholded pixels with position data stored in them, and then a view that shows a tracking dot which follows the color area.

Touching on a color on the image selects that color as the threshold color. Dragging your finger across the display adjusts the threshold sensitivity. You can easily see this on the overlaid threshold view.

The one shortcoming of this application is that I still use a CPU-bound routine to average out the pixels of the final image, so the performance is not what it should be. I'm working on finishing up this part of the application so that it can be GPU-accelerated like the rest of the processing pipeline.

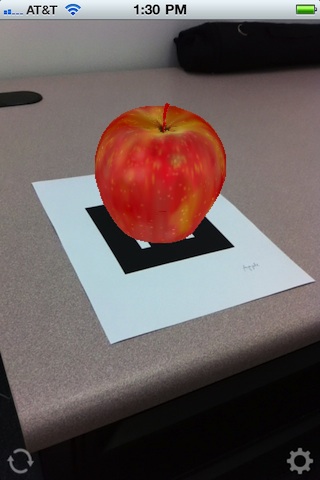

Potential for augmented reality

One potential application for this technology that I'll mention in my talk is in the case of augmented reality. Even though it is not a GPU-accelerated application, I want to point out a great example of this in the VRToolKit sample application by Benjamin Loulier.

This application leverages the open source ARToolKitPlus library developed in the Christian Doppler Laboratory at the Graz University of Technology. ARToolKitPlus looks for 2-D BCH codes in images from a video stream, recognizes those codes, and passes back information about their rotation, size, and position in 3-D space.

The VRToolKit application provides a great demonstration of how this works by overlaying a 3-D model on any of these codes it detects in a video scene, then rotating and scaling these objects in response to movement of these codes. I highly recommend downloading the source code and trying this out yourself. BCH code images that you can print out and use with this application can be found here, here, here, here, and here.

As I mentioned, this library is not hardware accelerated, so you can imagine what is possible with the extra horsepower the GPU could bring to this kind of processing.

Also, this library has a significant downside in that it uses the GPL license, so VRToolKit and all derivative applications built from it also must be released under the GPL.

Overall, I think there are many exciting applications for video processing that GPU acceleration can make practical. I'm anxious to see what people will build with this kind of technology.

Comments

many many thanks!

can you help me with color blending?

I need to blend my picture the way Photoshop's "Color Balance" does,

with settings like on the screenshot

http://dl.dropbox.com/u/19073449/Screenshots/5.png

Please, or give me way for research, thanks!

Many thanks again!

Hi,

I am not sure if this is the right place to ask for help.

I need help with the default image rotation property of GPUImage. In a simple example, I am reading a video from device library and displaying it using GPUImageMovie Class. The framework automatically rotates to fit aspect in a square GPUImageView. I don't want this to happen. If the videos are in portrait mode then it should be played in portrait mode and if the video is in landscape mode then it should play in landscape mode. Can you please guide me here.

Thanks

Hi.

:)

It's now April 2014 (most postings are two years old)

I require an iPhone iOS 7 capability to identify and track (multiple) object(s) such as the tennis ball example.

I'm excited about the demo software.

However, the quartz app is the only one that works.

I have Xcode 5.1.1 downloaded onto my development Mac.

Do you have a more-recent update of a tennis ball example source-code, or similar GPU-accelerated Sample Source Code ??

Thanks.

To be honest, this probably won't work for tracking multiple objects.

If you want a more recent version of this, take a look at my GPUImage project, which has a ColorObjectTracking example application that is a more modern take on this code. I am surprised that this won't build, since all you should need to do is update the deployment target and SDK. I know others have used this as an example application successfully recently.